This article is part of our reviews of AI research papers, a series of posts that explore the latest findings in artificial intelligence.

Since they made their first appearance in 2017, deepfakes, an artificial intelligence technology that can swap faces in videos, have been a constant source of controversy and concern. How serious a threat are they?

It depends on who you ask. Lawmakers are increasingly worried that the AI-doctored videos will unleash a new wave of fake news and soon become a serious national security concern, especially as the 2020 U.S. presidential elections draw near. Others believe deepfakes are being given more credit than is their due, and that they will remain a tool for creating non-consensual porn and shaming celebrities, not a weapon to influence politics and public opinion.

But regardless of differing views on deepfakes, fake news and politics, almost everyone agrees that AI-forged videos are becoming easier to create and harder to detect.

To protect the public, several companies and research labs have engaged in efforts to develop technologies that can detect AI-forged videos. However, the process is a cat-and-mouse game, and as the technology becomes better and better, detecting deepfakes will become harder and perhaps impossible one day.

But here’s a different approach to protect against deepfakes and other AI-based forgeries: Instead of trying to detect what’s fake, prove what’s real. This is the idea behind Archangel, a project by researchers at the UK’s University of Surrey.

Archangel is a tamperproof database that stores digital fingerprints of video files. And interestingly, it uses machine learning, the technology that is at the heart of deepfakes.

Fingerprinting videos with artificial intelligence

Publishers of online content, senders of email and other people who work with digital documents use digital signatures to protect the integrity of their files. Digital signatures use encryption keys and hashing algorithms to prove the provenance of a binary document. Hashing algorithms are mathematical functions that process the entire contents of a file and produce a fixed-length string of bytes that is, for practical purposes, unique to those contents.

A hashing algorithm will produce identical results as long as the file’s contents don’t change. But if a single byte in the file is modified, the hash will become totally different. This is perfect for protecting, say, text documents and executable files against tampering.
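
The behavior described above can be seen with Python’s standard `hashlib` module. This is only a minimal sketch: the two byte strings are made-up stand-ins for file contents, and `sha256_hex` is a helper name chosen for illustration.

```python
import hashlib

# Made-up stand-ins for a file's contents; they differ in exactly one byte.
original = b"The quick brown fox jumps over the lazy dog"
modified = b"The quick brown fox jumps over the lazy cog"


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


original_digest = sha256_hex(original)
modified_digest = sha256_hex(modified)

# Hashing the same bytes again always yields the same digest.
assert sha256_hex(original) == original_digest

# Count how many hex characters differ between the two digests.
differing = sum(a != b for a, b in zip(original_digest, modified_digest))

print(original_digest)
print(modified_digest)
print(f"{differing} of {len(original_digest)} hex characters differ")
```

Changing one letter of the input leaves almost no character of the digest in place, which is exactly why a bit-level hash cannot recognize a transcoded copy of the same video.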

But videos are quite different. A single video can be transcoded into different formats, resolutions and frame rates, which changes its binary structure but not its content. A classic hashing algorithm cannot tell that two videos contain the same content if their binary structure is different.

“We wanted the signature to be the same regardless of the codec the video is being compressed with. So if I use a standard cryptographic hash like SHA256 or MD5, if a single bit in that file changes, the hash is going to be completely different. If I take my video and convert it from MPEG-2 to MPEG-4, then that file will be of a totally different length, and the bits will have completely changed. But the content will be the same. What we needed was a content-aware hash, not a bit-level hash,” says John Collomosse, professor of computer vision at the University of Surrey and project lead of Archangel.

To create this content-aware hash, the researchers used neural networks, an AI construction that has become very popular in recent years. Neural networks are especially good at computer vision tasks such as image classification.

Archangel’s AI model trains a neural network on the frames of the video it wants to protect. The neural network then becomes accustomed to the content of the video and acts as the digital fingerprint of the video. The AI will be able to tell whether another video is the original file in a different format or a tampered version.

“The network is looking at the content of the video rather than its underlying bits and bytes,” Collomosse says.

The AI model will be able to detect both spatial and temporal tampering. Spatial tampering consists of modifications made to individual frames. By looking at the frames, the AI will be able to detect changes such as deepfake face-swaps or objects that have been added to or removed from the scene.

Temporal tampering is different from deepfakes but no less dangerous, and includes changes made to the sequence and length of frames. For instance, someone might intentionally remove small parts of a speech video to change its meaning, or change the speed of the video to achieve a desired effect.

A stark example of temporal tampering was a recent video of U.S. House Speaker Nancy Pelosi widely distributed on social media. The video had simply changed the speed of playback (a technique that has become known as “cheapfakes”) to make her appear confused and tired.

“One of the forms of tampering the AI can detect is removal of short segments of the video. These are temporal tampers. Archangel can detect temporal tampering of three seconds or more. So, if you just remove three seconds of a video that is several hours long, the AI will detect that,” Collomosse says.

One neural network per video

One of the unique characteristics of Archangel is that it requires one AI model per video. Every time a video is added to the Archangel archive, a new neural network is created.

“We’re using a residual neural network, or ResNet, which is commonly used for object detection, to pull some descriptors out. But then we use some neural network of our design and take those descriptors and do the tamper detection. It’s a combination of pre-trained network and a new neural network we presented in the paper,” Collomosse says.

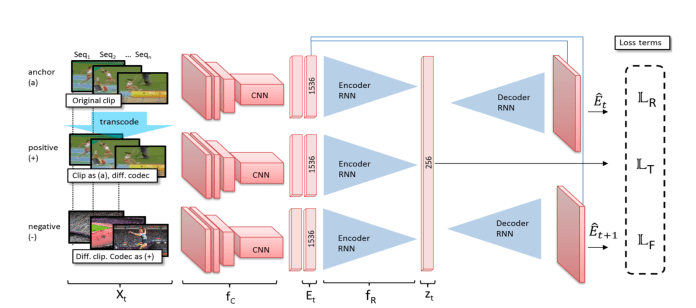

The AI model is called a triplet network. The AI first trains on the original video, called the anchor. And then it examines positive examples, which are the same video, but transcoded in different formats. Next, it analyzes negative examples, videos that are different from the original or tampered versions of the original video. After the training, the neural network becomes exclusively tailored to the original video and can detect legitimate and forged versions of it.
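
The anchor, positive and negative scheme is commonly trained with a triplet loss. The sketch below shows the standard form of that loss on toy vectors. It is not Archangel’s code: the `triplet_loss` and `euclidean` helpers, the margin of 1.0 and the three-element descriptors are all assumptions made for illustration.

```python
import math
from typing import Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length descriptor vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(
    anchor: Sequence[float],
    positive: Sequence[float],
    negative: Sequence[float],
    margin: float = 1.0,  # assumed value, not taken from the paper
) -> float:
    """Standard triplet loss: zero once the negative is at least `margin`
    farther from the anchor than the positive is."""
    gap = euclidean(anchor, positive) - euclidean(anchor, negative)
    return max(0.0, gap + margin)


# Toy descriptors (hypothetical values, not real network outputs).
anchor = [1.0, 0.0, 0.0]      # original video
positive = [0.9, 0.1, 0.0]    # same content in a different codec
tampered = [0.8, 0.3, 0.1]    # negative that is still too close to the anchor
unrelated = [-1.0, 2.0, 0.5]  # negative that is already far away

print(triplet_loss(anchor, positive, tampered))   # greater than zero
print(triplet_loss(anchor, positive, unrelated))  # 0.0
```

A non-zero loss tells training to pull the transcoded copy closer to the anchor and push the tampered copy away. Once every negative sits outside the margin, the loss is zero and the network has separated legitimate versions from forged ones.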

Interestingly, in machine learning jargon, when an AI model becomes too tailored to its training data, it is said to be “overfitted,” an outcome that is usually viewed negatively. But in the case of Archangel, the creators intentionally wanted to overfit their models to each video.

“We thought about, could we train a single neural network to protect all the videos in the archive? But that’s not a good idea, because it’s hard to create a model that will be representative of everything you might encounter now and in the future,” Collomosse says.

Such an AI model can also become vulnerable to adversarial attacks, videos carefully crafted to fool neural networks, Collomosse says.

“Overfitting is a dirty word sometimes when you’re talking about machine learning. In this case, what we try to do is exactly that: We overfit a network specifically to that video. The advantage is that we get unique protection specific to that video,” Collomosse says.

But the disadvantage, he points out, is that Archangel must store a separate neural network alongside each video in the archive. This adds both a storage and a training overhead. The storage isn’t much of a problem, since each AI model is around 100MB, a fraction of the size of the actual video.

But training the AI can pose a problem. It takes several hours to train a neural network for a single video, which can limit its use in settings where videos are created and registered very rapidly.

Storing the AI fingerprint on the blockchain

Once you have your content-aware, AI-powered digital fingerprint, the next problem is to store it in a way that is both trustworthy and resistant to tampering. Naturally, giving a single party or entity the power to define what is true would put too much trust in that party and make the system prone to abuse and cyberattacks.

That’s why Archangel has opted to use a blockchain to store its digital fingerprints. Blockchain is a distributed ledger of transactions that can store information in a transparent and irreversible way. The blockchain is maintained and updated by several independent parties that don’t necessarily trust each other. Each of them holds a copy of the ledger and the majority of them must agree before registering new records. Blockchain is the technology that underlies cryptocurrencies.
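
To see why records in such a ledger are hard to alter after the fact, here is a minimal hash-chain sketch. It illustrates the general idea only and says nothing about how Archangel’s ledger is actually implemented: the block layout and the `block_hash`, `append_block` and `chain_is_valid` helpers are hypothetical.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index: int, payload: str, prev_hash: str) -> str:
    """Hash a block's contents together with the previous block's hash."""
    body = json.dumps(
        {"index": index, "payload": payload, "prev": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_block(chain: list, payload: str) -> None:
    """Append a block that is linked to the current tip of the chain."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV
    index = len(chain)
    chain.append(
        {
            "index": index,
            "payload": payload,
            "prev": prev_hash,
            "hash": block_hash(index, payload, prev_hash),
        }
    )


def chain_is_valid(chain: list) -> bool:
    """Recompute every hash and check each link back to the first block."""
    prev_hash = GENESIS_PREV
    for block in chain:
        if block["prev"] != prev_hash:
            return False
        expected = block_hash(block["index"], block["payload"], block["prev"])
        if block["hash"] != expected:
            return False
        prev_hash = block["hash"]
    return True


ledger = []
for entry in ("record A", "record B", "record C"):
    append_block(ledger, entry)

print(chain_is_valid(ledger))  # True

# Editing an old record breaks the stored hash of that block.
ledger[1]["payload"] = "record B (edited)"
print(chain_is_valid(ledger))  # False
```

Because each block’s hash covers the previous block’s hash, anyone holding a copy of the ledger can detect a change to an earlier record by recomputing the chain.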

However, Archangel’s blockchain is not supported by a digital currency or token. Records are validated by proof of authority instead of proof of work, the dominant consensus mechanism of cryptocurrency blockchains. Archangel’s blockchain is purely meant for registering information about videos. Blockchains are notoriously bad at storing large files, which is why the actual video file and the neural network are stored off-chain and only their identifiers and cryptographic hashes are stored on the blockchain.
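
The off-chain arrangement can be sketched as a small record that holds only an identifier and two digests. This is an illustration under stated assumptions, not Archangel’s actual schema: the `LedgerRecord` fields, the helper names and the use of SHA-256 are all hypothetical.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class LedgerRecord:
    """Small record kept on-chain; the large files themselves stay off-chain."""
    video_id: str
    video_sha256: str
    model_sha256: str


def digest(data: bytes) -> str:
    """SHA-256 digest as a hex string (the hash choice is an assumption)."""
    return hashlib.sha256(data).hexdigest()


def make_record(video_id: str, video_bytes: bytes, model_bytes: bytes) -> LedgerRecord:
    """Build the on-chain record from the off-chain file contents."""
    return LedgerRecord(video_id, digest(video_bytes), digest(model_bytes))


def files_match_record(
    record: LedgerRecord, video_bytes: bytes, model_bytes: bytes
) -> bool:
    """Check that the off-chain files are the ones that were registered."""
    return (
        digest(video_bytes) == record.video_sha256
        and digest(model_bytes) == record.model_sha256
    )


# Placeholder bytes standing in for a video file and its trained model.
video = b"placeholder video bytes"
model = b"placeholder model weights"
record = make_record("archive-item-001", video, model)

print(files_match_record(record, video, model))            # True
print(files_match_record(record, video, model + b"\x00"))  # False
```

The record stays a few hundred bytes no matter how large the video or the model is, and the digests let anyone confirm that the off-chain files have not been swapped.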

Also, Archangel’s blockchain is permissioned, which means unlike bitcoin, it’s not public. Only a limited number of predefined nodes maintain and update the ledger. Surrey is currently trialing the platform with a network of National Archives from the UK, Estonia, Norway, Australia, and the U.S. At least half of the participants must approve every record before it is added to the blockchain and propagated across the network.
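
The approval rule amounts to a simple threshold check. The sketch below assumes that every participating archive casts one equal vote, which the source does not spell out, and `is_approved` is a hypothetical helper rather than part of Archangel.

```python
def is_approved(approvals: int, participants: int) -> bool:
    """Return True when at least half of the participants approved a record."""
    if participants <= 0:
        raise ValueError("participants must be positive")
    if not 0 <= approvals <= participants:
        raise ValueError("approvals must be between 0 and participants")
    # Integer comparison avoids floating-point rounding on odd counts.
    return 2 * approvals >= participants


# Five participants, matching the number of countries named in the trial.
print(is_approved(3, 5))  # True
print(is_approved(2, 5))  # False
```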

But the information stored on the blockchain is accessible to the public. While only National Archives can add new records, anyone can use the information stored on the blockchain and the AI models to validate a video against the archive.

“This is an application of blockchain for the public good,” Collomosse says. “In my view, the only reasonable use of the blockchain is when you have independent organizations that don’t necessarily trust one another but they do have this vested interest in this collective goal of mutual trust. And what we’re looking to do is secure the national archives of governments all around the world, so that we can underwrite their integrity using this technology.”

Archangel shows that with AI and blockchain, you no longer need to chase deepfakes. You have a point of reference where you can register the truth and against which you can validate unknown videos. Naturally, you can’t store everything on Archangel, but at least it provides a tool to protect videos of people who are more at risk of being targeted with deepfakes and other forms of forgery. Maybe one day, the technology will become fast and reliable enough to provide every person with their own AI-protected video archive.

“I think deepfakes are almost like an arms race. Because people are producing increasingly convincing deepfakes, and someday it might become impossible to detect them. That’s why the best you can do is try to prove the provenance of a video,” Collomosse says.