This article is part of Demystifying AI, a series of posts that (try to) disambiguate the jargon and myths surrounding AI.

In recent weeks, there has been a lot of conversation about Model Context Protocol (MCP). Anthropic introduced it in November 2024 as an open-source standard for connecting large language models (LLMs) to external data sources and tools. Since then, hundreds of projects have been built on top of MCP, OpenAI adopted it in March 2025, and there is a lot of excitement around what it can do for LLM applications, especially for AI agents.

But what is MCP and how can you prepare your applications and infrastructure for agentic frameworks?

Why MCP?

On their own, LLMs can only act as information machines that respond to prompts based on the knowledge they obtained during training. This is extremely useful for many tasks but limiting when you want to build LLM applications that must rely on external information or take actions in their environment.

Before MCP, there were a lot of ad-hoc approaches to integrating information and tools into LLMs. For example, if you wanted your model to interact with your Google Drive or database, you had to write the code and logic to call the API, extract information, and add it to the model’s context. If you wanted to add another tool, say a web search feature, you had to revisit the code, do the integrations, and change the logic to decide when to use Google Drive, search, or both.

The idea behind MCP is to simplify the interface between the LLM and external tools. MCP acts as an abstraction layer that helps the model discover external tools and their functionality. At inference time, the model decides on its own which tools to use based on the prompt and context.
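To make tool discovery more concrete, here is a minimal Python sketch of how a tool might be described to the model. It assumes a hypothetical `web_search` tool. The fields `name`, `description`, and `inputSchema` (a JSON Schema for the tool's arguments) follow the shape MCP servers use when listing their tools, while the plain-text rendering in `describe_tools_for_prompt` is only one illustrative way a client could put those descriptions in front of the model.

```python
import json

# A hypothetical tool, described with a name, a natural-language description,
# and a JSON Schema ("inputSchema") for its arguments.
WEB_SEARCH_TOOL = {
    "name": "web_search",
    "description": "Search the web and return the top results for a query.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "query": {"type": "string", "description": "The search query."},
            "num_results": {"type": "integer", "description": "How many results to return."},
        },
        "required": ["query"],
    },
}


def describe_tools_for_prompt(tools):
    """Render tool specs as plain text that a client could add to the model's context.

    This format is an illustrative choice; real clients and model APIs each
    have their own way of passing tool definitions to the model.
    """
    lines = []
    for tool in tools:
        lines.append(f"- {tool['name']}: {tool['description']}")
        lines.append(f"  arguments (JSON Schema): {json.dumps(tool['inputSchema'])}")
    return "\n".join(lines)


print(describe_tools_for_prompt([WEB_SEARCH_TOOL]))
```

The model never sees the tool's code, only this description, which is why a clear `description` and a precise schema matter so much for choosing the right tool.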

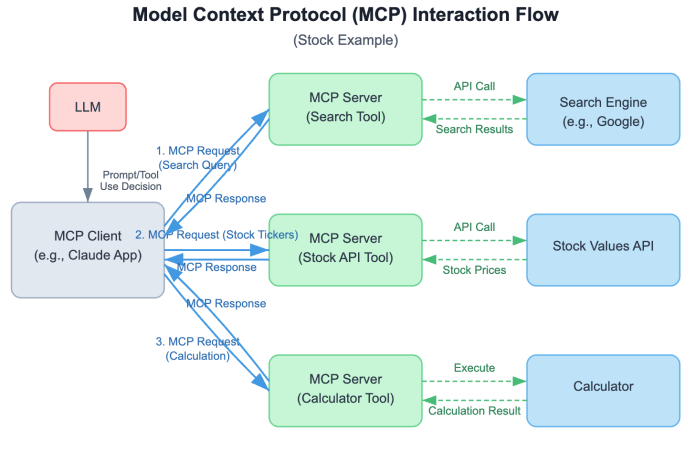

For example, the LLM might be given access to a search engine, an API for stock values, and a calculator through MCP. The user might prompt the model to calculate the gains from investing in the 10 best-performing stocks since the beginning of the year. The model will then decide that it needs to 1) get a list of the top-performing stocks from January to March, 2) get the price information for those stocks since the beginning of the year, and 3) calculate the difference between each stock's price on January 1 and its latest price. Based on this reasoning, it will use the search engine to accomplish (1), use that information to call the stock API and accomplish (2), and use the calculator to compare stock prices and accomplish (3).
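The three-step plan can be sketched as a chain of tool calls in which each step's output feeds the next. In this rough Python sketch, `search` and `get_prices` are hypothetical stand-ins for the search engine and stock API tools, passed in as plain functions, and `percent_gain` plays the role of the calculator. The order is hard-coded only to show the data flow; with MCP, it is the model that would choose this sequence at inference time.

```python
from typing import Callable, Dict, List


def percent_gain(start_price: float, latest_price: float) -> float:
    """Percentage change from the start-of-year price to the latest price."""
    if start_price <= 0:
        raise ValueError("start price must be positive")
    return (latest_price - start_price) / start_price * 100.0


def run_stock_plan(
    search: Callable[[str], List[str]],
    get_prices: Callable[[str], Dict[str, float]],
    top_n: int = 10,
) -> Dict[str, float]:
    """Execute the three-step plan, feeding each step's output into the next.

    Hypothetical tool signatures assumed here:
      search(query)      -> list of ticker symbols
      get_prices(ticker) -> {"start": <Jan 1 price>, "latest": <latest price>}
    """
    # Step 1: use the search tool to find the top-performing tickers.
    tickers = search("top performing stocks year to date")[:top_n]
    # Step 2: call the stock-price tool for each ticker.
    prices = {ticker: get_prices(ticker) for ticker in tickers}
    # Step 3: the "calculator" step computes each stock's gain in percent.
    return {t: percent_gain(p["start"], p["latest"]) for t, p in prices.items()}
```

Plugging in stub functions for `search` and `get_prices` is enough to exercise the data flow without touching a real API.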

Without MCP, the developer would have to predetermine the different ways the user would prompt the model and create fixed program flows to address those use cases. With MCP, you provide the LLM with a list of tools and their specifications and let the model decide which ones to use and in which order based on the user’s request. This is a much more flexible approach and allows for countless ways to combine tools without the need for fixed programming logic.
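The difference is easiest to see in code. The following Python sketch replaces a fixed program flow with a registry of tools and a generic dispatcher that runs whatever calls the model requests. The `tool` decorator, the toy `add` and `multiply` tools, and the hard-coded `model_chosen_calls` list (standing in for the model's output) are all hypothetical.

```python
from typing import Any, Callable, Dict

# Registry mapping tool names to handler functions.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(name: str):
    """Decorator that registers a function under a tool name."""
    def register(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = fn
        return fn
    return register


@tool("add")
def add(a: float, b: float) -> float:
    return a + b


@tool("multiply")
def multiply(a: float, b: float) -> float:
    return a * b


def dispatch(call: Dict[str, Any]) -> Any:
    """Run one tool call chosen by the model: {"name": ..., "arguments": {...}}."""
    handler = TOOLS.get(call["name"])
    if handler is None:
        raise KeyError(f"unknown tool: {call['name']}")
    return handler(**call.get("arguments", {}))


# Stand-in for the model's output: the model, not the developer,
# decides which tools to call and in what order.
model_chosen_calls = [
    {"name": "add", "arguments": {"a": 2, "b": 3}},
    {"name": "multiply", "arguments": {"a": 4, "b": 5}},
]
results = [dispatch(call) for call in model_chosen_calls]
```

Adding a new tool means registering one more function; the dispatcher and the rest of the application stay the same.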

This is becoming increasingly feasible thanks to advances in large reasoning models (LRMs), which can “think” and plan before generating the answer to the user’s prompt. LRMs are much better equipped to choose and use tools at inference time.

What are the components of MCP?

For MCP to work properly, several elements need to be coordinated:

MCP server: This is an application that exposes the features of external tools through MCP. For example, you can have an MCP server for a search engine, which provides a search function that takes as input the query and the number of desired results. It sends the query to the search engine API (e.g., Google Search or Brave Search) and returns the results as a formatted block of data.
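Under the hood, MCP messages are JSON-RPC 2.0 requests and responses. Below is a minimal, stdlib-only Python sketch of the request handling inside such a search server, covering only the `tools/list` and `tools/call` methods. It omits the initialization handshake and the transport layer, and `search_backend` is a hypothetical placeholder that returns dummy strings instead of calling a real search API.

```python
import json
from typing import Any, Dict, List, Optional


def search_backend(query: str, num_results: int) -> List[str]:
    """Hypothetical placeholder for a request to a real search API."""
    return [f"Result {i + 1} for '{query}'" for i in range(num_results)]


SEARCH_TOOL: Dict[str, Any] = {
    "name": "search",
    "description": "Run a web search and return formatted results.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "query": {"type": "string"},
            "num_results": {"type": "integer", "minimum": 1},
        },
        "required": ["query"],
    },
}


def _error(req_id: Optional[Any], code: int, message: str) -> str:
    """Serialize a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle_request(raw: str) -> str:
    """Handle one JSON-RPC 2.0 request for `tools/list` or `tools/call`."""
    req = json.loads(raw)
    req_id = req.get("id")
    method = req.get("method")

    if method == "tools/list":
        return json.dumps({"jsonrpc": "2.0", "id": req_id,
                           "result": {"tools": [SEARCH_TOOL]}})

    if method == "tools/call":
        params = req.get("params", {})
        if params.get("name") != "search":
            # -32602 is JSON-RPC's "Invalid params" code.
            return _error(req_id, -32602, f"Unknown tool: {params.get('name')}")
        args = params.get("arguments", {})
        hits = search_backend(args["query"], args.get("num_results", 3))
        # Results go back as a list of content items; here, a single text block.
        result = {"content": [{"type": "text", "text": "\n".join(hits)}],
                  "isError": False}
        return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})

    # -32601 is JSON-RPC's "Method not found" code.
    return _error(req_id, -32601, f"Method not found: {method}")
```

Swapping `search_backend` for a real API client is the only change needed to turn the placeholder into a working search tool; the protocol handling stays the same.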

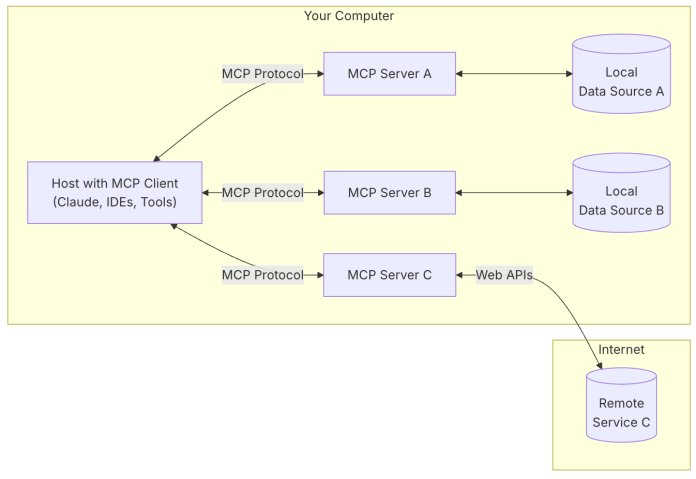

MCP client: This is an application that connects the LLM to the MCP servers. The best-known example is the Claude desktop application, which has a settings file where you can register the MCP servers that the model can access during inference. The application automatically adds tool descriptions to your prompts and parses the model's responses to detect tool calls that must be sent as requests to MCP servers. Alternatively, you can create your own MCP clients that connect LLMs to servers.
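On the client side, the main jobs are knowing which servers exist and turning the model's tool requests into protocol messages. This Python sketch assumes a settings layout loosely modeled on the `mcpServers` section of Claude Desktop's configuration file (the server names and script names are hypothetical) and shows how a tool call parsed from the model's output could be routed to the right server as a JSON-RPC `tools/call` request. Launching the server processes and parsing the model's raw output are left out.

```python
import itertools
import json
from typing import Any, Dict, Tuple

# Hypothetical client settings: each entry says how to launch a local MCP server.
CONFIG = json.loads("""
{
  "mcpServers": {
    "search": {"command": "python", "args": ["search_server.py"]},
    "files":  {"command": "python", "args": ["files_server.py"]}
  }
}
""")

# JSON-RPC request ids must be unique per connection; a counter is the simplest option.
_ids = itertools.count(1)


def tools_call_request(tool_name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 `tools/call` request with a fresh request id."""
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def route(tool_owner: Dict[str, str],
          model_call: Dict[str, Any]) -> Tuple[str, Dict[str, Any]]:
    """Map a tool call parsed from the model's output to (server name, request).

    `tool_owner` maps each tool name to the server that advertised it,
    as learned from each server's `tools/list` response.
    """
    server = tool_owner[model_call["name"]]
    if server not in CONFIG["mcpServers"]:
        raise KeyError(f"server not configured: {server}")
    return server, tools_call_request(model_call["name"], model_call.get("arguments", {}))
```

Keeping the tool-to-server mapping in the client is what lets one model use tools from many servers without any of them knowing about each other.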

The other two components of the MCP cycle are the LLM and the tools themselves. The LLM can be an API-based model such as Claude or the GPT series, or it can be an open-source model hosted on your own device or local server. The tools can also be hosted on your own computer (e.g., local file system, browser, local database) or in an online server (e.g., search engine, cloud-based database, GitHub, Slack).

MCP supports various transport mechanisms, including STDIO (standard input/output) for local processes and HTTP with Server-Sent Events (SSE) for web-based communication, offering flexibility in different deployment scenarios.
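With the STDIO transport, the client launches the server as a subprocess, and the two exchange JSON-RPC messages over standard input and output, one message per line. The Python sketch below shows only that newline-delimited framing, using an in-memory `io.StringIO` buffer in place of a real pipe; an actual server would read from `sys.stdin` and write to `sys.stdout` instead. The HTTP with SSE transport is not covered here.

```python
import io
import json
from typing import Any, Dict, Iterator, TextIO


def write_message(stream: TextIO, message: Dict[str, Any]) -> None:
    """Write one JSON-RPC message as a single line.

    json.dumps without `indent` emits no raw newline characters (newlines
    inside strings are escaped), so each message occupies exactly one line.
    """
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_messages(stream: TextIO) -> Iterator[Dict[str, Any]]:
    """Yield JSON-RPC messages from a newline-delimited stream, skipping blank lines."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# Round trip through an in-memory buffer standing in for a pipe.
buffer = io.StringIO()
write_message(buffer, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(buffer, {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                       "params": {"name": "search", "arguments": {"query": "multi\nline"}}})
buffer.seek(0)
received = list(read_messages(buffer))
```

Because each message is one line, the receiver never needs a length prefix or a streaming JSON parser; reading line by line is enough to split the stream into messages.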

How to prepare for MCP?
