OpenAI finally released GPT-4.5, the successor to GPT-4o, on February 27. And to put it mildly, the model was… underwhelming.

GPT-4.5 is the largest model OpenAI has developed. While there is very little information about the model’s architecture and training data, we know that the training was so intensive that it required OpenAI to spread it across several data centers to get it done on time.

The model’s pricing is insane, 15-30X more expensive than GPT-4o, 3-5X compared to o1, and 10-25X in comparison to Claude 3.7 Sonnet. It is currently only available to ChatGPT Pro users ($200 per month) and API clients who will pay for access on a per-token basis. Meanwhile, the model doesn’t show impressive results, with modest gains over GPT-4o and lagging behind o1 and o3-mini on reasoning tasks.

To be fair, OpenAI did not market GPT-4.5 as its best model (in fact, an initial version of its blog post stated that it was not a “frontier model”). It is also not a reasoning model, which is why the comparisons against models such as o3 and DeepSeek-R1 might not be fair. According to OpenAI, GPT-4.5 will be its last non-chain-of-thought model, which means it has only been trained to internalize world knowledge and user preferences.

What is GPT-4.5 good for?

Bigger models have a larger capacity to learn knowledge. GPT-4.5 hallucinates less often than other models, making it suitable for tasks where adherence to facts and contextual information might be very crucial. It also shows better capacity to adhere to user instructions and preferences, as shown by OpenAI’s demos and experiments shared by users online.

There is also a debate over whether it can generate better prose. OpenAI execs have certainly been praising the model for the quality of its responses. OpenAI CEO Sam Altman said, “trying GPT-4.5 has been much more of a ‘feel the AGI’ moment among high-taste testers than i expected!”

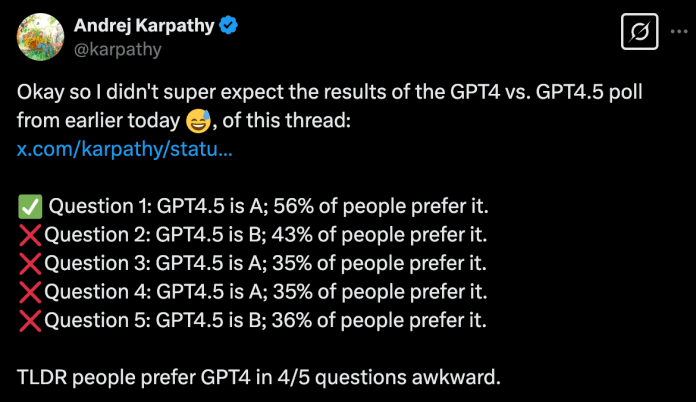

But online reaction has been mixed. Andrej Karpathy, AI scientist and OpenAI co-founder, said he “expect to see an improvement in tasks that are not reasoning heavy, and I would say those are tasks that are more EQ (as opposed to IQ) related and bottlenecked by e.g. world knowledge, creativity, analogy making, general understanding, humor, etc.”

However, a later survey he ran on outputs, users generally preferred GPT-4o’s answers over GPT-4.5. Writing quality is subjective, and it is likely that with the right prompt engineering techniques and tweaks, you can get a much smaller model to get the quality output you need.

As Karpathy said, “Either the high-taste testers are noticing the new and unique structure but the low-taste ones are overwhelming the poll. Or we’re just hallucinating things. Or these examples are just not that great. Or it’s actually pretty close and this is way too small sample size. Or all of the above.”

Was GPT-4.5 worth it?

In some ways, GPT-4.5 shows the limits of the scaling laws. During a talk at NeurIPS 2024, Ilya Sutskever, another OpenAI co-founder and former chief scientist, said, “Pre-training as we know it will unquestionably end… We’ve achieved peak data and there’ll be no more. We have to deal with the data that we have. There’s only one internet.”

The diminishing returns of GPT-4.5 is testament to the limitations of scaling general-purpose models that are pre-trained on internet data and post-trained for alignment through reinforcement learning from human feedback (RLHF). The next step for LLMs is test-time scaling (or inference-time scaling), where the model is trained to “think” longer by generating chain-of-thought (CoT) tokens. Test-time scaling improves the capability of models to solve reasoning problems and is the key to the success of models such as o1 and R1.

However, this doesn’t mean that GPT-4.5 was a failure. A strong knowledge foundation is necessary for the next generation of reasoning models. While GPT-4.5 per se might not be the go-to model for most tasks, it can become the foundation for future reasoing models (and might already be used in models such as o3).

As Mark Chen, OpenAI’s Chief Research Officer, said in an interview following the release of GPT-4.5, “You need knowledge to build reasoning on top of. A model can’t go in blind and just learn reasoning from scratch. So we find these two paradigms to be fairly complimentary, and we think they have feedback loops on each other.”