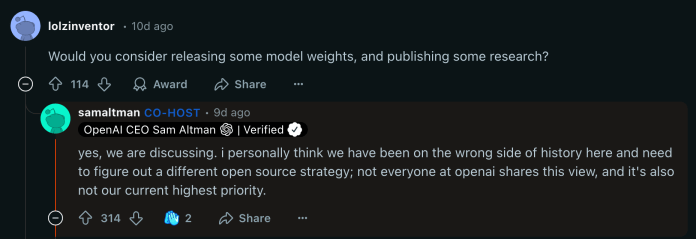

OpenAI CEO Sam Altman recently said in a Reddit AMA that he believed the company was on the wrong side of history when it came to open-sourcing their research.

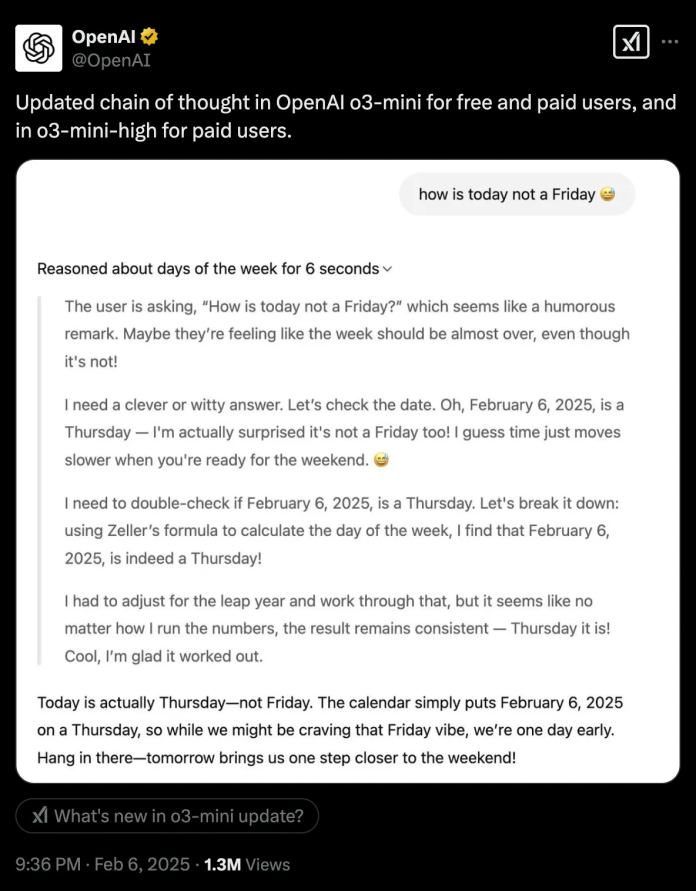

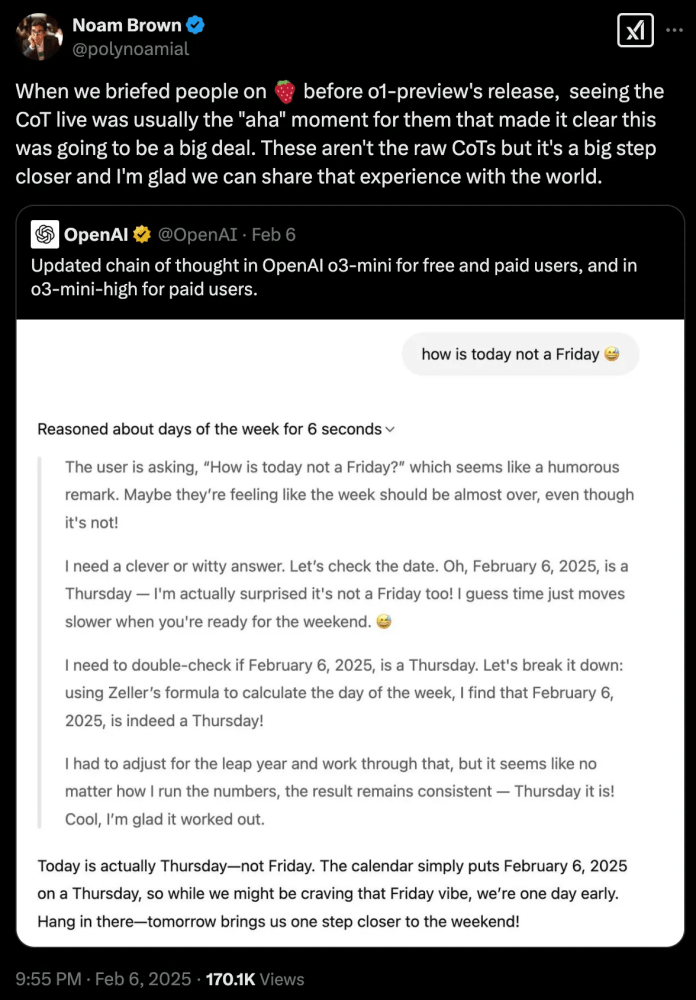

OpenAI has yet to release open models, but it has taken what could be the first step toward more transparency. o3-mini, its latest reasoning model, now shows a more detailed version of its chain-of-thought (CoT) trace, as announced by OpenAI’s X account.

Previously, OpenAI’s reasoning models only showed a high-level overview of the CoT, which made it difficult for developers to understand the model’s reasoning logic and adjust their prompts.

OpenAI hides the CoT to prevent rivals from using it to train their models. But after the release of DeepSeek-R1, which shows all its CoT tokens, OpenAI has been hard-pressed to maintain its competitive advantage.

The recently implemented changes show a more detailed version of the CoT but not the raw reasoning tokens, letting OpenAI strike a balance between being more transparent and protecting its secret sauce (if there is one).

Here is why showing the CoT is very important. In my previous side-by-side experiments, I found that o1 is slightly better than R1 in processing noisy data from the web. However, since it did not reveal its CoT, it was very difficult to troubleshoot its errors (and both models make errors when they’re not solving cute problems). On the other hand, R1’s transparency made it an overall better model for real-world applications.

For example, in one of the failed experiments, R1’s CoT helped me find out that the problem was not with the model itself but with the retrieval component that was obtaining the data (this kind of problem happens a lot in real applications). On the other hand, o1 only gave me a wrong answer and a vague reasoning chain.

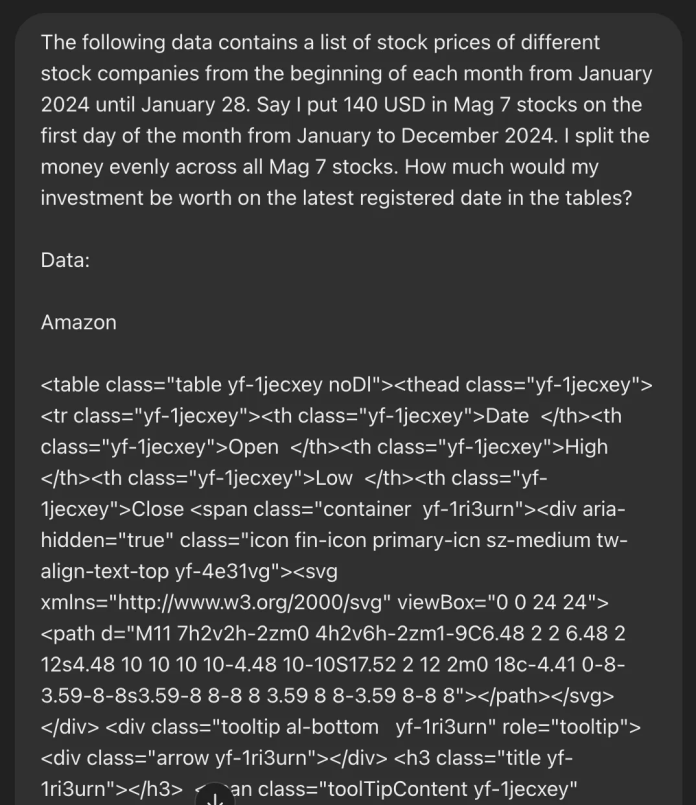

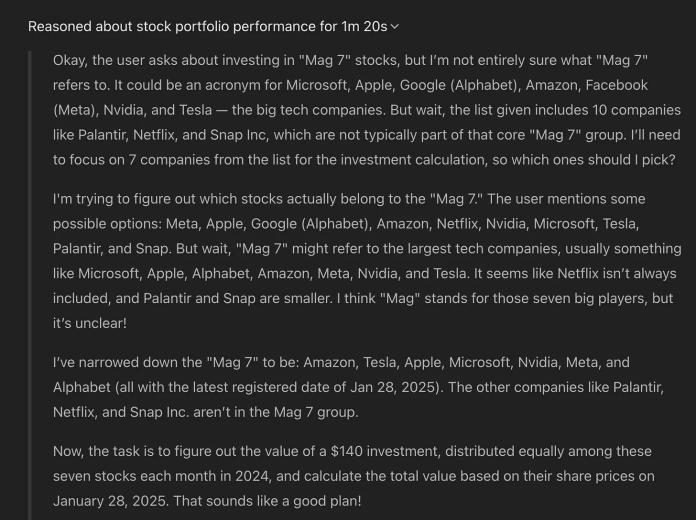

I tested o3-mini on a problem where I provided the model with a file containing historical stock price data from 2024 to 2025 and asked it questions that required reasoning and data analysis (since ChatGPT o3-mini does not support file attachments, I had to paste the contents inside the prompt). The model was supposed to calculate the value of a portfolio that invests $140 in the Magnificent 7 stocks every month. The file contains stock data grabbed from Yahoo Finance. It is noisy, containing both plain text and HTML elements, and it includes both Mag 7 and non-Mag 7 stocks.

I found the new detailed CoT to be very helpful. I could track the model reasoning about which stocks constitute the Mag 7, which stocks are in the file, which of them should be ignored, how to spread the investment across stocks, how to grab each stock's value at the beginning of each month, etc. It also provided me with a detailed answer that explained the process and the final value of the portfolio.
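The calculation the model had to carry out can be sketched in a few lines of Python. This is only an illustration, not the prompt or the data from the experiment. It assumes the $140 is split evenly across the Mag 7 tickers present in each month's data and that shares are bought at the first available price of the month. The function name, the ticker symbols chosen for the Mag 7, and the sample prices are all hypothetical.

```python
from collections import defaultdict

# Assumed ticker symbols for the Magnificent 7 (Alphabet has two share classes;
# GOOGL is used here for illustration).
MAG7 = {"AAPL", "MSFT", "GOOGL", "AMZN", "NVDA", "META", "TSLA"}


def portfolio_value(monthly_prices, final_prices, monthly_budget=140.0):
    """Value of a portfolio that invests a fixed budget every month.

    monthly_prices: list of dicts (one per month) mapping ticker -> price
                    at the start of that month. May contain non-Mag 7 tickers.
    final_prices:   dict mapping ticker -> price on the valuation date.
    """
    shares = defaultdict(float)
    for prices in monthly_prices:
        # Ignore everything that is not a Mag 7 stock.
        tickers = [t for t in prices if t in MAG7]
        if not tickers:
            continue
        # Assumption: spread the monthly budget evenly across the stocks found.
        per_stock = monthly_budget / len(tickers)
        for ticker in tickers:
            shares[ticker] += per_stock / prices[ticker]
    return sum(count * final_prices[ticker] for ticker, count in shares.items())


# Made-up prices for two months and two Mag 7 stocks, plus one stock to ignore.
months = [
    {"AAPL": 100.0, "MSFT": 200.0, "IBM": 50.0},
    {"AAPL": 200.0, "MSFT": 200.0, "IBM": 55.0},
]
latest = {"AAPL": 200.0, "MSFT": 400.0, "IBM": 60.0}

value = portfolio_value(months, latest)
print(round(value, 2))  # 490.0
```

Each step in the function corresponds to something the detailed CoT exposes: filtering the tickers, splitting the budget, and picking the price for each month. If the final number is wrong, the trace makes it possible to see which of those steps went astray.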

DeepSeek-R1 had three clear advantages over OpenAI’s reasoning models: It was open, cheap, and transparent. OpenAI has managed to catch up a bit with the release of o3-mini.

o3-mini costs $4.40 per million output tokens, down from $60 for o1, while outperforming o1 on many reasoning benchmarks. R1 costs around $7-$8 per million tokens on U.S. providers. (DeepSeek offers R1 at $2.19 per million tokens on its own servers, but many organizations will not be able to use it because it is hosted in China.)
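To put those prices in perspective, here is a rough back-of-the-envelope comparison. The prices are the ones quoted above, all treated as output-token prices (an assumption for the R1 figures), and the 50-million-token workload is a hypothetical number chosen purely for illustration.

```python
# Prices in USD per million output tokens, as quoted in the text.
# The R1 range on U.S. providers is represented by its two endpoints.
PRICE_PER_MILLION = {
    "o1": 60.00,
    "o3-mini": 4.40,
    "R1 (DeepSeek servers)": 2.19,
    "R1 (U.S. providers, low end)": 7.00,
    "R1 (U.S. providers, high end)": 8.00,
}


def output_cost(num_tokens, price_per_million):
    """Cost in USD of generating num_tokens output tokens."""
    return num_tokens / 1_000_000 * price_per_million


workload = 50_000_000  # hypothetical monthly output volume
for model, price in PRICE_PER_MILLION.items():
    print(f"{model}: ${output_cost(workload, price):,.2f}")
```

At these rates, o1 is roughly 13.6 times more expensive than o3-mini per output token, and o3-mini undercuts R1 on U.S. providers while remaining about twice the price of R1 on DeepSeek's own servers.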

The changed CoT output has also helped OpenAI catch up on transparency (almost). But R1 is fast becoming a standard for reasoning models as more and more cloud providers integrate it into their offerings and model builders create derivatives from it. It remains to be seen whether OpenAI will change its policy of keeping its models closed.