Large language models (LLMs) require huge memory and computational resources. LLM compression techniques make models more compact and able to run on memory-constrained devices.

The two main categories of machine learning are supervised and unsupervised learning. In this post, we examine their key features and differences.

Neural architecture search (NAS) is a set of machine learning techniques that can help discover optimal neural networks for a given problem.

The main takeaway from the buildup of developments in the cybersecurity landscape is that privacy is becoming a commodity. The CIA is spying on your phone. Hackers are breaking into your home. Your documents, emails,...

Google's new A2A framework lets different AI agents chat and work together seamlessly, breaking down silos and improving productivity across platforms.

Recent advances in technology, especially artificial intelligence and automation, coupled with growing social inequality and a shrinking middle class, have given prominence to Universal Basic Income.

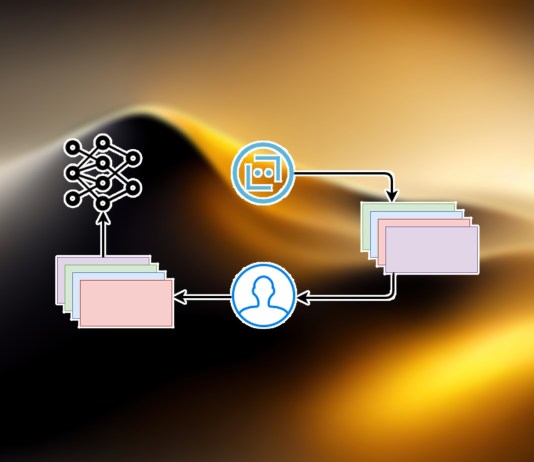

Reinforcement learning from human feedback (RLHF) is the technique that has made ChatGPT so impressive. But there is more to RLHF than large language models (LLMs).

OpenAI o1 and o3 are very effective at math, coding, and reasoning tasks. But they are not the only models that can reason.

We rely increasingly on messaging apps to carry out our daily communications, whether for personal use or to do business. And there are literally tons of them on the internet and app stores, each sporting...

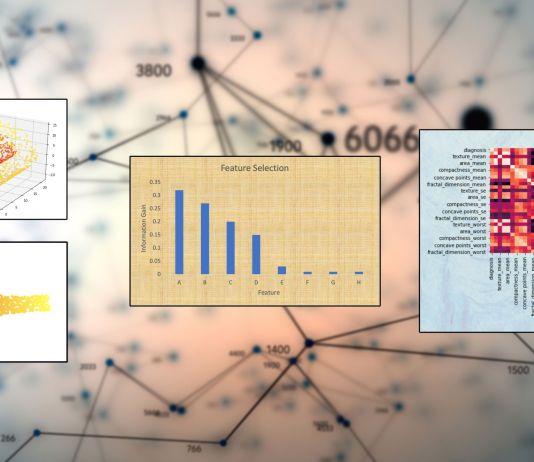

Dimensionality reduction slashes the costs of machine learning and sometimes makes it possible to solve complicated problems with simpler models.